The training scripts are modified from the CVND---Gesture-Recognition repository. The original scripts are intended as a starting point for training a 3D CNN for gesture recognition on the Jester dataset. Here they are modified to train a 2D CNN with a temporal shift module. The 2D CNN backbone in this project is mobilenetV2_tsm, adapted from the paper TSM: Temporal Shift Module for Efficient Video Understanding. (Their GitHub link)
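To make the temporal shift idea concrete, here is a minimal pure-Python sketch of the bidirectional shift described in the TSM paper: one group of channels takes its values from the next frame, a second group from the previous frame, and the rest stay in place. The function name `temporal_shift`, the `fold_div=8` default, and the list-of-lists layout are assumptions for illustration; the model code in this project operates on tensors and may use a different (for example, one-directional) variant.

```python
def temporal_shift(frames, fold_div=8):
    """Shift a fraction of channels along the time axis.

    frames: list of T frames, each a list of C per-channel values.
    Returns a new list of the same shape; the input is not modified.
    """
    num_frames = len(frames)
    num_channels = len(frames[0])
    fold = num_channels // fold_div  # channels per shifted group

    # Start from zeros so positions with no temporal neighbor stay zero-padded.
    shifted = [[0] * num_channels for _ in range(num_frames)]
    for t in range(num_frames):
        for c in range(num_channels):
            if c < fold:
                # First group: take the value from the next frame.
                if t + 1 < num_frames:
                    shifted[t][c] = frames[t + 1][c]
            elif c < 2 * fold:
                # Second group: take the value from the previous frame.
                if t - 1 >= 0:
                    shifted[t][c] = frames[t - 1][c]
            else:
                # Remaining channels are left untouched.
                shifted[t][c] = frames[t][c]
    return shifted


# Three frames with eight channels each; frame t holds the value t + 1 everywhere.
clip = [[t + 1] * 8 for t in range(3)]
for row in temporal_shift(clip):
    print(row)
```

Because the shift only moves existing values between frames, it adds no parameters or multiplications, which is why a 2D backbone can pick up temporal information this way.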

- Download the Jester gesture recognition dataset (available on Kaggle).
- Put the video files in the chosen path.
- Change the training config inside the configs directory.
- Start training by running the following command: `python train.py --config configs/config.json -g 0`
- To start from a checkpoint, run: `python train.py --config configs/config.json -g 0 -r True`
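The flags in the commands above could be parsed along the following lines. This is only a sketch of how such a command line might be handled, not the actual contents of `train.py`: the long option names `--gpus` and `--resume` and the helper `str2bool` are hypothetical, and only `--config`, `-g`, and `-r` appear in this README.

```python
import argparse


def str2bool(value):
    """Parse 'True'/'False'-style strings; plain bool('False') would be True."""
    if value.lower() in ("true", "1", "yes"):
        return True
    if value.lower() in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser():
    parser = argparse.ArgumentParser(description="Train a 2D CNN with TSM")
    parser.add_argument("--config", required=True, help="path to the JSON config")
    # The long names below are hypothetical; only -g and -r appear in the README.
    parser.add_argument("-g", "--gpus", default="0", help="GPU id(s) to use")
    parser.add_argument("-r", "--resume", type=str2bool, default=False,
                        help="resume training from a checkpoint")
    return parser


# Parse the same arguments as the checkpoint command shown above.
args = build_parser().parse_args(
    ["--config", "configs/config.json", "-g", "0", "-r", "True"]
)
print(args.config, args.gpus, args.resume)
```

The explicit `str2bool` converter matters when a flag is passed as `-r True`: using `type=bool` would treat any non-empty string, including `False`, as true.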

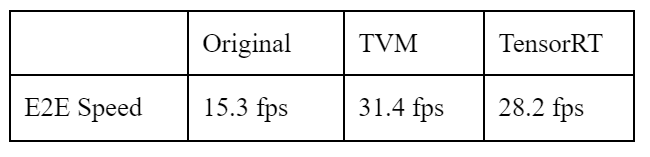

For Jetson Nano 2GB:

- Prerequisite: to run the original version, only `torch` is needed. To run the TVM version, you need to install the `TVM`, `onnx`, and `onnx-simplifier` libraries. To run the TensorRT version, you need to install the `torch2trt` library.
- Change the host IP address inside the inference function.
- Run the inference notebook on the edge device.
- Run the remote_control notebook on your PC.
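Before opening the notebooks, it can help to check which of the three versions the installed packages support. The sketch below uses only the standard library; the dictionary `BACKEND_MODULES` and the function `missing_modules` are hypothetical names, and the import names are assumptions based on how these packages are usually imported (TVM as `tvm`, onnx-simplifier as `onnxsim`).

```python
from importlib.util import find_spec

# Packages listed in the prerequisites, keyed by the version they enable.
# Import names are assumptions: TVM imports as `tvm`, onnx-simplifier as `onnxsim`.
BACKEND_MODULES = {
    "original": ["torch"],
    "tvm": ["tvm", "onnx", "onnxsim"],
    "tensorrt": ["torch2trt"],
}


def missing_modules(backend):
    """Return the modules listed for `backend` that cannot be found."""
    return [name for name in BACKEND_MODULES[backend] if find_spec(name) is None]


for backend in BACKEND_MODULES:
    missing = missing_modules(backend)
    status = "ready" if not missing else "missing: " + ", ".join(missing)
    print(f"{backend}: {status}")
```

`find_spec` only checks whether a module can be located, so the check runs quickly and does not load any of the heavy libraries.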

Drumming Finger

Stop Sign

Thumb Up

Thumb Down

Swiping Left

Swiping Right

Zooming In With Full Hand

Zooming Out With Full Hand

Zooming In With Two Fingers

Zooming Out With Two Fingers

Shaking Hand
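Assuming the list above names the gestures the demo responds to, a prediction could be filtered against it as in the sketch below. The names `SUPPORTED_GESTURES` and `accept_prediction` are hypothetical, and `"No gesture"` is used only as an example of a label that is not in the list.

```python
# Gesture names copied from the list above.
SUPPORTED_GESTURES = (
    "Drumming Finger",
    "Stop Sign",
    "Thumb Up",
    "Thumb Down",
    "Swiping Left",
    "Swiping Right",
    "Zooming In With Full Hand",
    "Zooming Out With Full Hand",
    "Zooming In With Two Fingers",
    "Zooming Out With Two Fingers",
    "Shaking Hand",
)


def accept_prediction(label):
    """Return the label if it is one of the listed gestures, otherwise None."""
    return label if label in SUPPORTED_GESTURES else None


print(accept_prediction("Thumb Up"))
print(accept_prediction("No gesture"))
```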