AI Gaussian Splatting World Generator - Transform text prompts into explorable 3D worlds

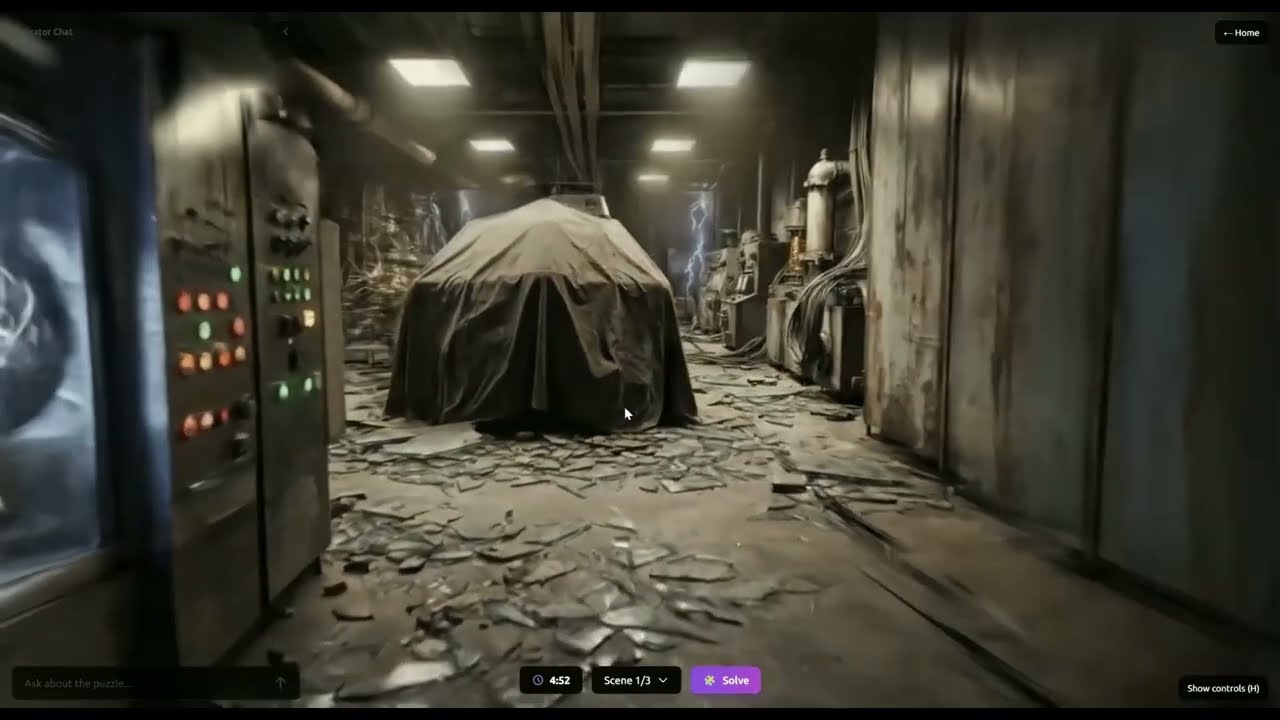

Venture is an AI-powered 3D world generator that leverages cutting-edge Gaussian Splatting technology to create immersive 3D environments from simple text descriptions.

- 🎥 AI Video Generation (Runway veo3.1) - Generate cinematic footage from text

- 🌐 Gaussian Splatting (Vid2Scene) - Convert video into photorealistic 3D worlds

- 🎮 Interactive 3D Viewer (Three.js) - Explore generated worlds in real-time

- 🤖 Multi-Agent Orchestration (OpenAI GPT-4) - Intelligent world creation pipeline

- 🧠 AI-Generated Narratives - Optional storylines and puzzles

- 🎙️ Voice Narration - Immersive audio storytelling (ElevenLabs)

- 💬 Interactive Chat - Dynamic guidance and hints

Text Prompt → AI Video (Runway) → 3D Reconstruction (Vid2Scene) → Gaussian Splat (.ply) → Real-time 3D Viewer (Three.js)
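Each arrow in this flow is an asynchronous step that consumes the previous step's output. The following is a minimal TypeScript sketch of that chaining. It assumes hypothetical stage functions injected through a `PipelineStages` interface. These names are illustrative and are not the actual exports of the project's service modules.

```typescript
// Hypothetical stage signatures; the real services may look different.
interface PipelineStages {
  enhancePrompt: (prompt: string) => Promise<string>;
  generateVideo: (prompt: string) => Promise<string>; // resolves to a video path
  extractFrames: (videoPath: string) => Promise<string>; // resolves to a frames directory
  reconstructSplat: (framesDir: string) => Promise<string>; // resolves to a .ply path
}

interface WorldResult {
  prompt: string;
  enhancedPrompt: string;
  videoPath: string;
  framesDir: string;
  splatPath: string;
}

// Runs the stages strictly in order; a failure in any stage rejects the whole run.
async function runPipeline(prompt: string, stages: PipelineStages): Promise<WorldResult> {
  const trimmed = prompt.trim();
  if (trimmed.length === 0) throw new Error("Prompt must not be empty");
  const enhancedPrompt = await stages.enhancePrompt(trimmed);
  const videoPath = await stages.generateVideo(enhancedPrompt);
  const framesDir = await stages.extractFrames(videoPath);
  const splatPath = await stages.reconstructSplat(framesDir);
  return { prompt: trimmed, enhancedPrompt, videoPath, framesDir, splatPath };
}
```

Because the stages are injected, you can exercise the orchestration with stubs. Each real stage can then own its own polling and retry logic.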

1. 🎬 AI Video Generation (Runway veo3.1)
   - Input: Text description (e.g., "Victorian mansion library")
   - Output: 8-16 second cinematic video
   - Time: ~2-3 minutes

2. 🌐 3D Reconstruction (Vid2Scene Gaussian Splatting)
   - Input: Generated video
   - Processing: Cloud-based neural reconstruction
   - Output: High-quality .ply Gaussian Splat file
   - Time: ~10-20 minutes

3. 🎮 Interactive Viewer (Three.js + Custom Renderer)
   - Loads .ply Gaussian Splat
   - Real-time navigation (WASD controls)
   - Photorealistic rendering at 60fps

4. 📝 Optional Enhancement (AI Agents)
   - Story generation, puzzles, voice narration
   - Time: ~1 minute

Total generation time: ~15-25 minutes per world

📖 Complete Technical Documentation

Venture uses a multi-agent system to intelligently orchestrate the world generation pipeline:

```mermaid
graph TD
    A[User Text Prompt] --> B[Orchestrator Agent]
    B --> C{World Generation Pipeline}
    C --> D[Prompt Enhancer]
    D --> E[Runway API]
    E --> F[Generated Video]
    F --> G[Frame Processor]
    G --> H[Vid2Scene API]
    H --> I[Gaussian Splat .ply]
    C --> J[Optional: Content Agent]
    J --> K[Story Generator]
    J --> L[Audio Generator]
    I --> M[3D World Viewer]
    K -.-> M
    L -.-> M
    M --> N[Explorable 3D World]
    N --> O[Optional: Chat Agent]
    O --> P[Context-Aware Assistance]
    style B fill:#ff6b6b
    style H fill:#4ecdc4
    style M fill:#45b7d1
    style I fill:#96ceb4
```
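The dotted edges from the Story and Audio Generators mark those branches as optional, so the world should still load if one of them fails. One way to express this is to run the optional branches with `Promise.allSettled` and keep whatever succeeded. The sketch below works under that assumption. `generateStory` and `generateAudio` are hypothetical callbacks that stand in for the Content Agent's GPT-4 and ElevenLabs calls.

```typescript
interface OptionalContent {
  story?: string;
  audioPath?: string;
  errors: string[]; // one entry per optional branch that failed
}

// Runs both optional branches concurrently; a rejected branch is recorded, never rethrown.
async function generateOptionalContent(
  generateStory: () => Promise<string>,
  generateAudio: () => Promise<string>,
): Promise<OptionalContent> {
  const [story, audio] = await Promise.allSettled([generateStory(), generateAudio()]);
  const result: OptionalContent = { errors: [] };
  if (story.status === "fulfilled") result.story = story.value;
  else result.errors.push(`story: ${String(story.reason)}`);
  if (audio.status === "fulfilled") result.audioPath = audio.value;
  else result.errors.push(`audio: ${String(audio.reason)}`);
  return result;
}
```

Unlike `Promise.all`, `Promise.allSettled` does not reject when a single branch fails. A quota error on the audio side therefore degrades the experience instead of aborting it.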

| Agent | Purpose | Technology |
|---|---|---|
| Orchestrator Agent | Manages the entire world generation pipeline and coordinates API calls | OpenAI GPT-4 |
| Prompt Enhancer | Optimizes user prompts for better video generation results | GPT-4 + Prompt Engineering |
| Frame Processor | Extracts and prepares video frames for 3D reconstruction | FFmpeg + Custom Logic |
| Content Agent (Optional) | Generates narratives, puzzles, and audio for enhanced experiences | GPT-4 + ElevenLabs |
| Chat Agent (Optional) | Provides context-aware assistance during world exploration | GPT-4 + State Management |
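For the Frame Processor, extracting a fixed number of evenly spaced frames maps onto FFmpeg's `fps` filter. Sampling at `frameCount / durationSeconds` frames per second spreads the frames across the whole clip. The sketch below only builds the FFmpeg argument list. The 64-frame default comes from the generation flow. The 1280-pixel target width and JPEG output are assumptions, because this README does not state which resolution Vid2Scene prefers.

```typescript
interface FrameExtractionOptions {
  videoPath: string;
  outputDir: string;
  durationSeconds: number; // assumed known (e.g. from the generation request or ffprobe)
  frameCount?: number; // defaults to 64, as in the generation flow
  targetWidth?: number; // hypothetical default; not a documented Vid2Scene requirement
}

// Builds the argument list for `ffmpeg`, to be passed to spawn() from node:child_process.
function buildFrameExtractionArgs(opts: FrameExtractionOptions): string[] {
  const frameCount = opts.frameCount ?? 64;
  const targetWidth = opts.targetWidth ?? 1280;
  if (!(opts.durationSeconds > 0)) throw new Error("durationSeconds must be positive");
  // Sampling rate that yields about frameCount frames spread evenly over the clip.
  const fps = frameCount / opts.durationSeconds;
  return [
    "-i", opts.videoPath,
    // fps samples frames; scale resizes to targetWidth and keeps the aspect ratio (-2 = even height).
    "-vf", `fps=${fps},scale=${targetWidth}:-2`,
    // Hard cap, in case boundary rounding would produce one extra frame.
    "-frames:v", String(frameCount),
    "-q:v", "2", // high JPEG quality (lower is better)
    `${opts.outputDir}/frame_%03d.jpg`,
  ];
}
```

Passing the array to `spawn("ffmpeg", args)` avoids shell quoting issues with paths. For an 8-second clip the filter becomes `fps=8`, which is one frame every 125 ms.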

- 🎯 Intelligent Prompt Enhancement: Automatically optimizes descriptions for better 3D results

- ⚡ Pipeline Automation: Fully automated from text to explorable 3D world

- 🎨 Photorealistic Quality: Gaussian Splatting produces cinema-quality 3D environments

- 🚀 Real-time Navigation: Smooth 60fps exploration with WASD controls

- 🔧 Modular Architecture: Optional narrative/audio layers for enhanced experiences

World Generation Flow:

```text
┌─────────────────────────────────────────────────────────────┐
│ 1. USER INPUT                                               │
│    "A mysterious ancient library with floating books"       │
└────────────────────┬────────────────────────────────────────┘
                     ▼
┌─────────────────────────────────────────────────────────────┐
│ 2. ORCHESTRATOR AGENT                                       │
│    • Validates prompt                                       │
│    • Initiates generation pipeline                          │
│    • Monitors progress across all steps                     │
└────────────────────┬────────────────────────────────────────┘
                     ▼
┌─────────────────────────────────────────────────────────────┐
│ 3. PROMPT ENHANCER                                          │
│    Enhanced: "Cinematic shot: ancient library interior,     │
│      floating leather-bound books, magical atmosphere,      │
│      warm candlelight, photorealistic, 8K quality"          │
└────────────────────┬────────────────────────────────────────┘
                     ▼
┌─────────────────────────────────────────────────────────────┐
│ 4. RUNWAY VIDEO GENERATION                                  │
│    • API call with enhanced prompt                          │
│    • 8-16 second cinematic video                            │
│    • Status: Polling until complete (~2-3 min)              │
└────────────────────┬────────────────────────────────────────┘
                     ▼
┌─────────────────────────────────────────────────────────────┐
│ 5. FRAME PROCESSOR                                          │
│    • Extract 64 frames from video (FFmpeg)                  │
│    • Optimize resolution for Vid2Scene                      │
│    • Prepare upload package                                 │
└────────────────────┬────────────────────────────────────────┘
                     ▼
┌─────────────────────────────────────────────────────────────┐
│ 6. VID2SCENE 3D RECONSTRUCTION                              │
│    • Upload frames to cloud                                 │
│    • Gaussian Splatting neural reconstruction (~10-20 min)  │
│    • Download .ply file                                     │
└────────────────────┬────────────────────────────────────────┘
                     ▼
┌─────────────────────────────────────────────────────────────┐
│ 7. 3D WORLD READY                                           │
│    • Gaussian Splat loaded in viewer                        │
│    • Real-time navigation enabled                           │
│    • Optional: Add narrative/audio layer                    │
└─────────────────────────────────────────────────────────────┘
```
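Steps 4 and 6 both wait on remote jobs: about 2-3 minutes for Runway and 10-20 minutes for Vid2Scene. A generic polling helper with exponential backoff and an overall deadline can serve both. This is a sketch. The `RemoteStatus` shape and the `checkStatus` callback are assumptions and do not mirror the actual Runway or Vid2Scene status responses.

```typescript
// Assumed status shape; map the real API response onto it inside checkStatus.
type RemoteStatus<T> =
  | { state: "pending" }
  | { state: "succeeded"; output: T }
  | { state: "failed"; error: string };

interface PollOptions {
  initialDelayMs?: number;
  maxDelayMs?: number;
  timeoutMs?: number;
  sleep?: (ms: number) => Promise<void>; // injectable for tests
  now?: () => number; // injectable clock for tests
}

// Polls until the remote job succeeds or fails, doubling the wait between polls
// up to maxDelayMs and giving up once the next wait would pass the deadline.
async function pollUntilDone<T>(
  checkStatus: () => Promise<RemoteStatus<T>>,
  opts: PollOptions = {},
): Promise<T> {
  const {
    initialDelayMs = 2000,
    maxDelayMs = 30000,
    timeoutMs = 30 * 60 * 1000, // 30 min: headroom over a ~10-20 min reconstruction
    sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms)),
    now = () => Date.now(),
  } = opts;
  const deadline = now() + timeoutMs;
  let delay = initialDelayMs;
  for (;;) {
    const status = await checkStatus();
    if (status.state === "succeeded") return status.output;
    if (status.state === "failed") throw new Error(`Remote job failed: ${status.error}`);
    if (now() + delay > deadline) throw new Error(`Timed out after ${timeoutMs} ms`);
    await sleep(delay);
    delay = Math.min(delay * 2, maxDelayMs);
  }
}
```

The backoff caps the wait at 30 seconds. That limits a 20-minute reconstruction to under 50 status requests, compared with about 600 at a fixed 2-second interval.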

Pipeline State Management:

Each generation job tracks:

- Generation Status: queued → video → processing → reconstruction → complete

- Asset Locations: video path, frames directory, .ply file location

- Quality Metrics: Frame count, splat point density, rendering performance

- Optional Content: Narrative data, audio files, interaction points
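The status progression above is effectively a small state machine. The sketch below shows a job record that allows only forward, one-step transitions. The `GenerationJob` field names are hypothetical; the project's real models live in `types/experience.ts` and may differ. The `failed` state is an added assumption, because the list above does not name an error state.

```typescript
type GenerationStatus =
  | "queued" | "video" | "processing" | "reconstruction" | "complete" | "failed";

// Forward order from the README; "failed" is an assumed extra terminal state.
const STATUS_ORDER: GenerationStatus[] = ["queued", "video", "processing", "reconstruction", "complete"];

// Hypothetical job record; the optional fields mirror the tracked asset locations.
interface GenerationJob {
  id: string;
  status: GenerationStatus;
  videoPath?: string;
  framesDir?: string;
  splatPath?: string;
  error?: string;
}

// Returns a new job moved to `next`. Only single forward steps are allowed,
// any non-terminal state may move to "failed", and terminal states are final.
function advance(
  job: GenerationJob,
  next: GenerationStatus,
  patch: Omit<Partial<GenerationJob>, "id" | "status"> = {},
): GenerationJob {
  if (job.status === "complete" || job.status === "failed") {
    throw new Error(`Job ${job.id} is already ${job.status}`);
  }
  if (next !== "failed") {
    const from = STATUS_ORDER.indexOf(job.status);
    const to = STATUS_ORDER.indexOf(next);
    if (to !== from + 1) throw new Error(`Invalid transition ${job.status} -> ${next}`);
  }
  return { ...job, ...patch, status: next };
}
```

Returning a new object instead of mutating the job makes each transition easy to persist or log.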

Prerequisites:

- Node.js >= 18.0.0
- FFmpeg installed on your system
- Required API keys:
  - Runway (video generation)
  - Vid2Scene Enterprise (Gaussian Splatting) ⭐ REQUIRED
  - OpenAI (storyline & agents)
  - ElevenLabs (audio; optional in development)

Installation:

```bash
# 1. Install dependencies
npm install

# 2. Install FFmpeg (if not already installed)
# macOS
brew install ffmpeg
# Ubuntu/Debian
sudo apt-get install ffmpeg
# Windows: download from https://ffmpeg.org/download.html
# or use: winget install ffmpeg

# 3. Configure environment variables
# Create .env.local at the project root with:
#   RUNWAY_API_KEY=...
#   VID2SCENE_API_KEY=...     (Enterprise account required)
#   OPENAI_API_KEY=...
#   ELEVENLABS_API_KEY=...    (optional)

# 4. Create storage directories
mkdir -p storage/{videos,frames,splats,audio,thumbnails}

# 5. Start the development server
npm run dev
```

Project structure:

```text
venture/
├── src/
│   ├── app/                            # Next.js App Router
│   │   ├── page.tsx                    # Landing page & world generator
│   │   ├── experience/[id]/            # 3D World viewer
│   │   └── api/                        # Backend API routes
│   │       ├── generate/               # World generation pipeline
│   │       ├── chat/                   # Optional chat assistance
│   │       └── enhance/                # Optional content enhancement
│   ├── components/                     # React components
│   │   ├── GaussianSplatViewer*.tsx    # 3D Gaussian Splat rendering
│   │   ├── ProgressTracker.tsx         # Generation progress UI
│   │   └── EnigmaChat.tsx              # Optional chat interface
│   ├── lib/                            # Core pipeline logic
│   │   ├── services/                   # External API integrations
│   │   │   ├── runway-service.ts       # Runway video generation
│   │   │   ├── vid2scene-service.ts    # Vid2Scene 3D reconstruction
│   │   │   ├── game-agent.ts           # Pipeline orchestration
│   │   │   ├── context-agent.ts        # Optional: Content generation
│   │   │   └── elevenlabs-service.ts   # Optional: TTS
│   │   └── processors/                 # Media processing
│   │       ├── video-processor.ts      # FFmpeg frame extraction
│   │       └── thumbnail-generator.ts  # Preview generation
│   ├── types/                          # TypeScript types
│   │   ├── experience.ts               # World data models
│   │   └── api.ts                      # API interfaces
│   └── utils/                          # Utilities & helpers
├── storage/                            # Generated assets
│   ├── videos/                         # Runway video outputs
│   ├── frames/                         # Extracted video frames
│   ├── splats/                         # Gaussian Splat .ply files ⭐
│   ├── audio/                          # Optional: Voice narration
│   └── thumbnails/                     # World preview images
└── public/                             # Static assets
```
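The pipeline cannot run without its core keys. A check at server startup can fail fast instead of surfacing a vague error partway through a 20-minute generation. The sketch below assumes the required/optional split from the prerequisites, with ElevenLabs treated as optional. `checkEnv` and `assertEnv` are hypothetical helpers, not existing project functions.

```typescript
// Split based on the prerequisites: ElevenLabs is optional in development.
const REQUIRED_KEYS = ["RUNWAY_API_KEY", "VID2SCENE_API_KEY", "OPENAI_API_KEY"] as const;
const OPTIONAL_KEYS = ["ELEVENLABS_API_KEY"] as const;

interface EnvCheck {
  missingRequired: string[];
  missingOptional: string[];
}

// Unset or whitespace-only values count as missing.
function checkEnv(env: Record<string, string | undefined>): EnvCheck {
  const isMissing = (key: string): boolean => (env[key] ?? "").trim() === "";
  return {
    missingRequired: REQUIRED_KEYS.filter(isMissing),
    missingOptional: OPTIONAL_KEYS.filter(isMissing),
  };
}

// Throws if any required key is missing; returns the missing optional keys for logging.
function assertEnv(env: Record<string, string | undefined>): string[] {
  const { missingRequired, missingOptional } = checkEnv(env);
  if (missingRequired.length > 0) {
    throw new Error(`Missing required environment variables: ${missingRequired.join(", ")}`);
  }
  return missingOptional;
}
```

Call `assertEnv(process.env)` once when the server boots. A missing Vid2Scene key then surfaces immediately, and you can log the returned list of optional keys as a warning.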

| Service | Purpose |
|---|---|
| Runway veo3.1 ⭐ | AI video generation from text (8-16 s cinematic footage) |
| Vid2Scene ⭐ | Neural 3D reconstruction via Gaussian Splatting |
| FFmpeg | Video frame extraction and processing |
| Three.js | Real-time Gaussian Splat rendering |

| Service | Purpose |
|---|---|
| OpenAI GPT-4 | Prompt enhancement, narrative generation, chat assistance |
| ElevenLabs | Voice narration (text-to-speech) |

⚠️ Vid2Scene requires an Enterprise account to access the API

⭐ marks the core technologies required for world generation.

1. Enter a description, for example:
   - "A cyberpunk street at night with neon signs"
   - "Medieval castle throne room"
   - "Futuristic space station interior"

2. Automatic world generation:
   - 🎬 AI Video Generation: ~2-3 min
   - 🌐 3D Gaussian Splatting: ~10-20 min
   - ✅ Total: ~15-25 minutes to an explorable 3D world

3. Explore your world:
   - Navigate in real time with photorealistic quality
   - 60fps smooth rendering
   - Fully explorable environment

Controls:

- WASD / Arrow keys: Move around the world
- Mouse: Look around (360° view)
- Space: Move up (fly mode)
- Shift: Move down
- H: Show/hide controls help
- Mouse scroll: Adjust movement speed
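The bindings above reduce to a per-frame displacement. Add up the direction vectors of the held keys, normalize so diagonal movement is no faster than straight movement, then scale by speed and frame time. The sketch below is framework-agnostic and assumes keys are tracked by `KeyboardEvent.code` values. The speed multiplier of 1.1 per scroll step and the 0.5-20 clamp range are hypothetical.

```typescript
type Vec3 = { x: number; y: number; z: number };

// Camera-local directions keyed by KeyboardEvent.code: -z is forward, +y is up.
const KEY_DIRECTIONS: Record<string, Vec3> = {
  KeyW: { x: 0, y: 0, z: -1 },
  ArrowUp: { x: 0, y: 0, z: -1 },
  KeyS: { x: 0, y: 0, z: 1 },
  ArrowDown: { x: 0, y: 0, z: 1 },
  KeyA: { x: -1, y: 0, z: 0 },
  ArrowLeft: { x: -1, y: 0, z: 0 },
  KeyD: { x: 1, y: 0, z: 0 },
  ArrowRight: { x: 1, y: 0, z: 0 },
  Space: { x: 0, y: 1, z: 0 },
  ShiftLeft: { x: 0, y: -1, z: 0 },
  ShiftRight: { x: 0, y: -1, z: 0 },
};

// Displacement for one frame: sum the held directions, normalize, scale by speed * dt.
function movementDelta(held: Set<string>, speed: number, dtSeconds: number): Vec3 {
  const sum: Vec3 = { x: 0, y: 0, z: 0 };
  held.forEach((code) => {
    const d = KEY_DIRECTIONS[code];
    if (d === undefined) return; // ignore keys without a binding
    sum.x += d.x;
    sum.y += d.y;
    sum.z += d.z;
  });
  const length = Math.hypot(sum.x, sum.y, sum.z);
  if (length === 0) return { x: 0, y: 0, z: 0 }; // nothing held, or opposing keys cancel out
  const scale = (speed * dtSeconds) / length;
  return { x: sum.x * scale, y: sum.y * scale, z: sum.z * scale };
}

// Scrolling up (negative deltaY) speeds up, scrolling down slows down, within [min, max].
function adjustSpeed(speed: number, wheelDeltaY: number, min = 0.5, max = 20): number {
  if (wheelDeltaY === 0) return speed;
  const next = wheelDeltaY < 0 ? speed * 1.1 : speed / 1.1;
  return Math.min(max, Math.max(min, next));
}
```

The result is in camera-local space, with -z as forward as in Three.js cameras. In Three.js you could apply it with `camera.translateX(d.x)` and `camera.translateZ(d.z)`. Adding `camera.position.y += d.y` keeps Space and Shift moving along the world's vertical axis.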

- Add Narrative: Generate an AI story for your world

- Voice Narration: Add immersive audio description

- Interactive Elements: Enable chat-based exploration guide

Available scripts:

```bash
# Development server
npm run dev

# Production build
npm run build
npm start

# Type checking
npm run type-check

# Linting
npm run lint

# Test specific features
npm run test:api          # Test API endpoints
npm run test:generation   # Test experience generation
```

Deployment (Vercel):

```bash
# 1. Install the Vercel CLI
npm i -g vercel

# 2. Deploy
vercel

# 3. Configure environment variables in the Vercel dashboard
#    (add all required API keys from .env.local)
```

Production considerations:

- Storage: Use cloud storage (S3, R2) instead of the local `storage/` folder
- Database: Consider PostgreSQL for experience/enigma persistence

- Caching: Implement Redis for agent state and context management

- Queue: Use BullMQ for long-running 3D generation tasks
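Until a Redis-backed queue such as BullMQ is in place, the core concern can be illustrated with an in-process concurrency limiter: do not run too many 10-20 minute reconstructions at once. This is a swapped-in stand-in, not BullMQ's API. It has no persistence, no retries, and no coordination across server instances, which is what a real queue adds.

```typescript
// In-process stand-in for a job queue: runs at most `limit` tasks at once.
// Waiting tasks start in FIFO order as running tasks finish.
class ConcurrencyLimiter {
  private active = 0;
  private readonly waiting: Array<() => void> = [];
  private readonly limit: number;

  constructor(limit: number) {
    if (!Number.isInteger(limit) || limit < 1) {
      throw new Error("limit must be a positive integer");
    }
    this.limit = limit;
  }

  async run<T>(task: () => Promise<T>): Promise<T> {
    if (this.active < this.limit) {
      this.active++; // take a free slot immediately
    } else {
      // Park until a finishing task hands its slot over.
      await new Promise<void>((resolve) => this.waiting.push(resolve));
    }
    try {
      return await task();
    } finally {
      const next = this.waiting.shift();
      if (next) {
        next(); // transfer this slot to the next waiter; `active` stays the same
      } else {
        this.active--;
      }
    }
  }
}
```

You could wrap each Vid2Scene job as `limiter.run(() => reconstruct(job))`, where `reconstruct` is a hypothetical function. This caps concurrent reconstructions while later requests wait their turn.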

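Docker:

```dockerfile
FROM node:18-alpine

# FFmpeg is needed at runtime for frame extraction
RUN apk add --no-cache ffmpeg

WORKDIR /app

COPY package*.json ./
# Install all dependencies: `npm run build` typically needs devDependencies (e.g. TypeScript)
RUN npm ci

COPY . .
RUN npm run build

# Remove devDependencies once the build is done
RUN npm prune --omit=dev

EXPOSE 3000
CMD ["npm", "start"]
```

- ✅ AI-Powered 3D World Generation: Text → Photorealistic 3D in ~20 minutes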

- ✅ Gaussian Splatting Technology: State-of-the-art neural 3D reconstruction

- ✅ Full Pipeline Automation: Seamless integration of Runway + Vid2Scene

- ✅ Real-time Exploration: Smooth 60fps navigation in generated worlds

- ✅ Modular Enhancement System: Optional narratives, audio, and interactivity

This is a hackathon project - contributions are welcome!

- Fork the repository
- Create your feature branch (`git checkout -b feature/amazing-feature`)
- Commit your changes (`git commit -m 'Add amazing feature'`)
- Push to the branch (`git push origin feature/amazing-feature`)
- Open a Pull Request

License: MIT

Built with ❤️ for the Supercell Hackathon

For questions or issues, please open a GitHub issue or contact the team.

Venture - AI Gaussian Splatting World Generator

Transform text into explorable 3D worlds ✨